Taking a step closer to a technology-driven future, Google is planning a system that will let smart home users communicate with their devices through a UI designed for wearables. Spotted by Android Headlines, the new technology goes beyond voice commands and pairs Internet of Things devices with a pair of smart glasses.

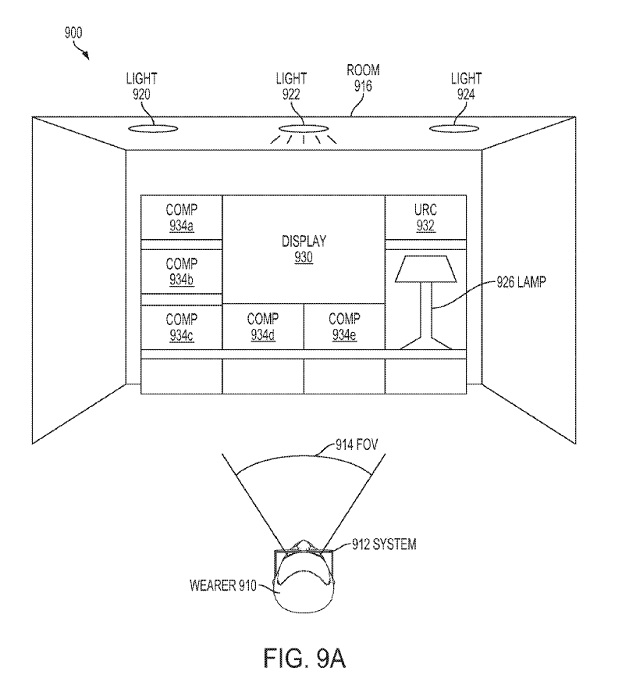

According to the patent, the new wearable will include technology that follows the user’s gaze and records the wearer’s head movements. The wearable identifies smart home devices in the user’s view with the aid of machine vision and artificial intelligence, then stores images of the identified objects in its device memory. Once that information is stored, a menu is displayed along with a “How To” guide that shows users how to interact with the selections.

For instance, if the wearer looks at an already connected lamp, the system would prompt them to move their head up or down to adjust the lamp’s brightness.
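The lamp example can be illustrated with a minimal Python sketch. It is not taken from the patent: the `Lamp` class, the `on_gaze` and `apply_head_motion` functions, and the `STEP_PER_DEGREE` value are all hypothetical names and numbers chosen for illustration, and the sketch assumes the glasses report which device the wearer is looking at and how far the head has tilted.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical tuning value: brightness points (percent) per degree of head tilt.
STEP_PER_DEGREE = 2.0


@dataclass
class Lamp:
    """Stand-in for a connected lamp; not a real smart home API."""
    name: str
    brightness: float = 50.0  # percent, from 0 to 100


def on_gaze(target: str, known_devices: Dict[str, Lamp]) -> Optional[str]:
    """Return a "How To" hint if the gazed-at object is a known device."""
    lamp = known_devices.get(target)
    if lamp is None:
        return None  # unrecognized object: no menu is shown
    return f"Move your head up or down to adjust the brightness of {lamp.name}."


def apply_head_motion(lamp: Lamp, pitch_delta_degrees: float) -> float:
    """Tilt up (positive) brightens, tilt down (negative) dims; clamp to 0-100."""
    new_level = lamp.brightness + pitch_delta_degrees * STEP_PER_DEGREE
    lamp.brightness = max(0.0, min(100.0, new_level))
    return lamp.brightness


if __name__ == "__main__":
    devices = {"lamp-1": Lamp(name="desk lamp")}
    print(on_gaze("lamp-1", devices))
    print(apply_head_motion(devices["lamp-1"], 10.0))
```

Clamping the result keeps an exaggerated head movement from pushing the brightness outside a valid range; how the real system would scale or filter head motion is not described in the source.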

The technique is not entirely new, however: Nokia proposed a similar concept last year at the International Broadcasting Convention 2017. Nokia’s idea, titled ‘Any Vision’, centered on bringing television to any surface or display. With ‘Any Vision’, Nokia proposed that media would follow users wherever they went and that users could play or pause it at their convenience, giving them smartphone-like features.

Google filed the patent for this design with the World Intellectual Property Organization (WIPO). Although Google has taken a similar approach, the results will be vastly different because its design depends on smart glasses and a heads-up display. That adds a level of automation and interaction not seen in Nokia’s proposed solution, which relied on touch inputs and hand motions to direct which display or surface the media should move to.